Ten years ago, a client asked me: 'How do our product content changes impact team responsiveness? Who's performing well as a product content moderator?'

I stared at their beautifully configured Pimcore system. Product attributes perfectly modelled, classifications elegantly structured, workflows thoughtfully designed. We could tell you everything about their products, but nothing about their product experience performance.

Their marketing team was doing what marketing teams do - running experiments. Testing new product positioning, trying different content approaches, iterating on messaging. But we had no way to track how these experiments affected operational quality or team performance.

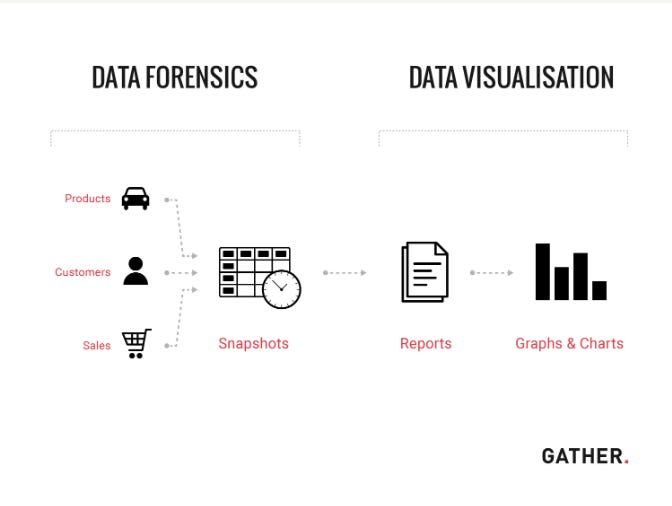

So, after some theory crafting, and with reusability in mind, I built a prototype covering some basic functions for them. Later we iterated on it and demoed it as a concept at a Pimcore conference in 2016. It had dashboards and root cause analysis, could tell you when things changed and why, and what’s more, it was a highly configurable framework.

Then it sat there, collecting dust for years…

Enter PXM

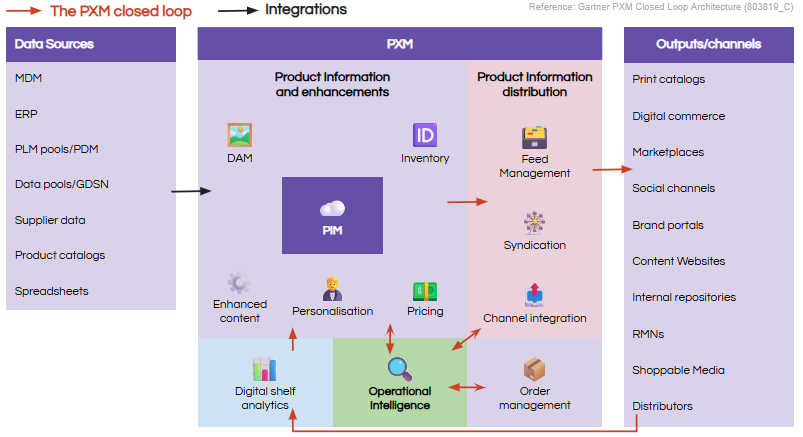

Fast forward to today, and I'm looking at this ecosystem again with fresh eyes. Product Experience Management has evolved remarkably. We now have sophisticated content enhancement capabilities, digital shelf analytics monitoring product performance across marketplaces, and AI-powered content generation enabling rapid experimentation at unprecedented scale.

PXM platforms excel at content performance analytics - tracking syndication success, channel optimisation, and market presence. Digital shelf analytics has emerged as a core capability. System observability is commonplace too, but lives elsewhere entirely - that's the domain of Datadog and New Relic.

Here's what kept bothering me… we spend enormous effort modelling product data. Attributes, relationships, classifications. We make it configurable, flexible, business-owned. But how do we track compliance trends with the same sophistication? How do we monitor stock level impacts systematically? How do we measure margin performance over time?

With 75% of marketers now using AI for content creation, we're automating faster than we can validate. AI should enable more creative risk-taking, but surely there's a risk here - if you can't trust what gets generated at scale, how do you maintain experimental courage?

To illustrate this, I ran a simple experiment: I asked ChatGPT to recreate the Starbucks logo repeatedly, using each iteration as the source for the next.

The results are telling - while each individual recreation looked 'close enough', by the tenth iteration the siren's face had lost definition, text spacing had shifted (see the F in coffee), and proportions had drifted significantly from the original. This is AI drift in action: small errors compound invisibly until brand integrity is compromised.

This multiplication effect, where experimental failures scale faster than experimental insights, is exactly why operational intelligence becomes critical. Every test becomes a potential compliance risk. Every creative iteration could introduce systematic errors across thousands of products.

What's missing from the PXM space is 'intelligence' about data operations - the ability to monitor content quality, process integrity, and compliance standards in real time. It's the same concept we were exploring years ago, now essential for experimental confidence.

Marketing teams need space for chaos, spontaneity, and play - these drive breakthrough campaigns and customer insights. But without operational intelligence, every experiment becomes a potential operational risk rather than a learning opportunity.

If we can model products this well, why can't we model product experience performance? How do we make compliance tracking as configurable as product classifications? How do we put margin alerts right next to pricing data? There's nothing stopping us from modelling business KPIs directly next to our product information. It feels like the right place for this data to sit, rather than orbiting in some shallow, best-in-class, off-the-shelf SaaS solution. Large data gravity isn’t always a bad thing if it’s the right data.

The Prototype Revisited

As an engineer, this challenge fascinates me. The intersection of creative experimentation and operational confidence sits right at the heart of what makes systems truly valuable. Having watched this gap persist for years, I've decided to take the (staying space-themed) Mark Watney approach: science the shit out of Product Experience Management.

I've dusted off that 2016 prototype and given it a fresh Pimcore Studio perspective. The modelling capabilities that seemed ahead of their time for PIM might be exactly what PXM needs now. Here’s a sneak preview.

I'll be developing this further and seeking input from other agencies and developers to get their take on what operational intelligence should look like in practice in the PXM space.

Bonus: There's also broader research I'm doing into AI and creativity - whether these systems actually enable innovation or just make everything converge toward safe mediocrity. That's a different conversation entirely, but the operational confidence question sits right at the heart of it. Stay tuned.

How do you balance experimental marketing with operational confidence? What creative constraints are you navigating as AI scales?